We have a variable, paragraph, which stores a few sentences. We use triple quotes so that the text can span multiple lines. We then create a variable, sentences, which stores the tokenized sentences. This is done using the nltk.sent_tokenize() function. We then show the output of the sentences variable. There were 4 sentences in the original string, and you can see there are 4 items in the list, which represents the tokenized string.

Tokens are also the first stage of parsing: a lexer turns raw text into tokens, and a parser works on those tokens. Suppose a lexer reads an expression that starts with 437, followed by a space, a '+' sign, and another number. The lexer scans the text and finds '4', '3', '7' and then the space ' '. The job of the lexer is to recognize that these first characters constitute one token of type NUM. Then the lexer finds a '+' symbol, which corresponds to a second token of type PLUS, and lastly it finds another token of type NUM. The parser then combines the tokens produced by the lexer into larger structures.
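To make the lexer description concrete, here is a minimal sketch of a hand-written lexer. It emits NUM and PLUS tokens and skips whitespace. The token names NUM and PLUS come from the text. The function name tokenize_expression, the regular expressions, and the second number (12) are assumptions chosen for illustration and are not part of any library.

```python
import re
from typing import List, Tuple

# Hypothetical token specification: NUM and PLUS follow the token types in
# the text; SKIP consumes whitespace and produces no token.
TOKEN_SPEC = [
    ("NUM", r"\d+"),
    ("PLUS", r"\+"),
    ("SKIP", r"\s+"),
]
TOKEN_RE = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize_expression(text: str) -> List[Tuple[str, str]]:
    """Scan text left to right and return a list of (type, value) pairs."""
    tokens = []
    pos = 0
    while pos < len(text):
        match = TOKEN_RE.match(text, pos)
        if match is None:
            raise ValueError(f"Unexpected character {text[pos]!r} at position {pos}")
        kind = match.lastgroup  # name of the alternative that matched
        if kind != "SKIP":
            tokens.append((kind, match.group()))
        pos = match.end()
    return tokens


# '4', '3', '7' are grouped into one NUM token; the space is skipped.
print(tokenize_expression("437 + 12"))
# [('NUM', '437'), ('PLUS', '+'), ('NUM', '12')]
```

A real parser would consume this list of tokens instead of raw characters. That separation is why lexing and parsing are usually kept as two stages.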

#PYTHON STRING TOKEN CODE#

Comments in the code describe at a high level what is happening. Sentence tokenization works just like word tokenization, but now we use the nltk.sent_tokenize() function.
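The sentence-tokenizing step can be sketched as follows. This assumes NLTK is installed and its Punkt sentence model has been downloaded, for example with nltk.download('punkt'). Recent NLTK releases may ask for 'punkt_tab' instead. The snippet should still run without NLTK, so the hypothetical helper split_sentences falls back to a naive regular expression. That fallback splits only after '.', '!' or '?' followed by whitespace and is much cruder than Punkt.

```python
import re
from typing import List

# Triple quotes let the paragraph span multiple lines.
paragraph = """Python is a great language.
It is easy to learn.
Many people use it for data science.
NLTK makes text processing easier."""


def split_sentences(text: str) -> List[str]:
    """Tokenize text into sentences, preferring NLTK's Punkt model."""
    text = text.strip()
    try:
        import nltk
        return nltk.sent_tokenize(text)
    except (ImportError, LookupError):
        # Hypothetical fallback used when NLTK or its Punkt data is missing.
        return re.split(r"(?<=[.!?])\s+", text)


sentences = split_sentences(paragraph)
print(sentences)
print(len(sentences))  # 4 sentences in the paragraph, 4 items in the list
```

Punkt is preferred because it handles abbreviations such as "Dr." that the naive regular expression would wrongly treat as sentence ends.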

Below, we tokenize the string 'Python is a great language to use for programming'. The string is stored in a variable named string. We then create another variable, words, which uses the nltk.word_tokenize() function to split the string into words. When we type words in the terminal, we see a list of items, with each item being one word from the string.

Next, you will see how the join() method converts a list back into a string:

stringtoken.join(iterable)

Parameters:
- iterable: a list (or any other iterable) of strings, including single characters. Every item must be a string, so numbers must be converted with str() first; otherwise join() raises a TypeError.
- stringtoken: the separator string, such as a space ' ' or a comma ','.

The method joins all the elements of the iterable, separated by stringtoken.

We will now show how to tokenize sentences in a string.
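Here is a minimal sketch of the word-tokenizing and joining steps. It assumes NLTK and its tokenizer data are installed. If they are not, the hypothetical helper split_words falls back to a simple regular expression that keeps runs of word characters and single punctuation marks. The fallback is an approximation, not NLTK's behavior.

```python
import re
from typing import List

string = "Python is a great language to use for programming"


def split_words(text: str) -> List[str]:
    """Tokenize text into words, preferring nltk.word_tokenize()."""
    try:
        import nltk
        return nltk.word_tokenize(text)
    except (ImportError, LookupError):
        # Hypothetical fallback: runs of word characters, or single punctuation marks.
        return re.findall(r"\w+|[^\w\s]", text)


words = split_words(string)
print(words)

# join() goes the other way: the separator is placed between the items.
print(" ".join(words))
print(",".join(words))

# join() only accepts strings, so convert numbers with str() first.
numbers = [1, 2, 3]
print("-".join(str(n) for n in numbers))  # 1-2-3
```

Joining the word list with a single space rebuilds the original sentence here only because the input has single spaces and no punctuation. In general, tokenizing and joining is not guaranteed to round-trip.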

#PYTHON STRING TOKEN HOW TO#

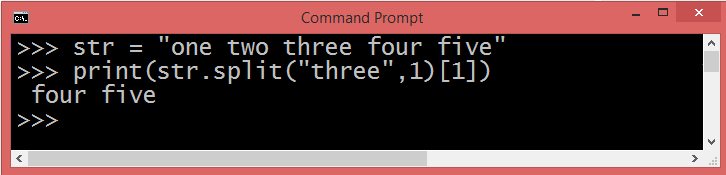

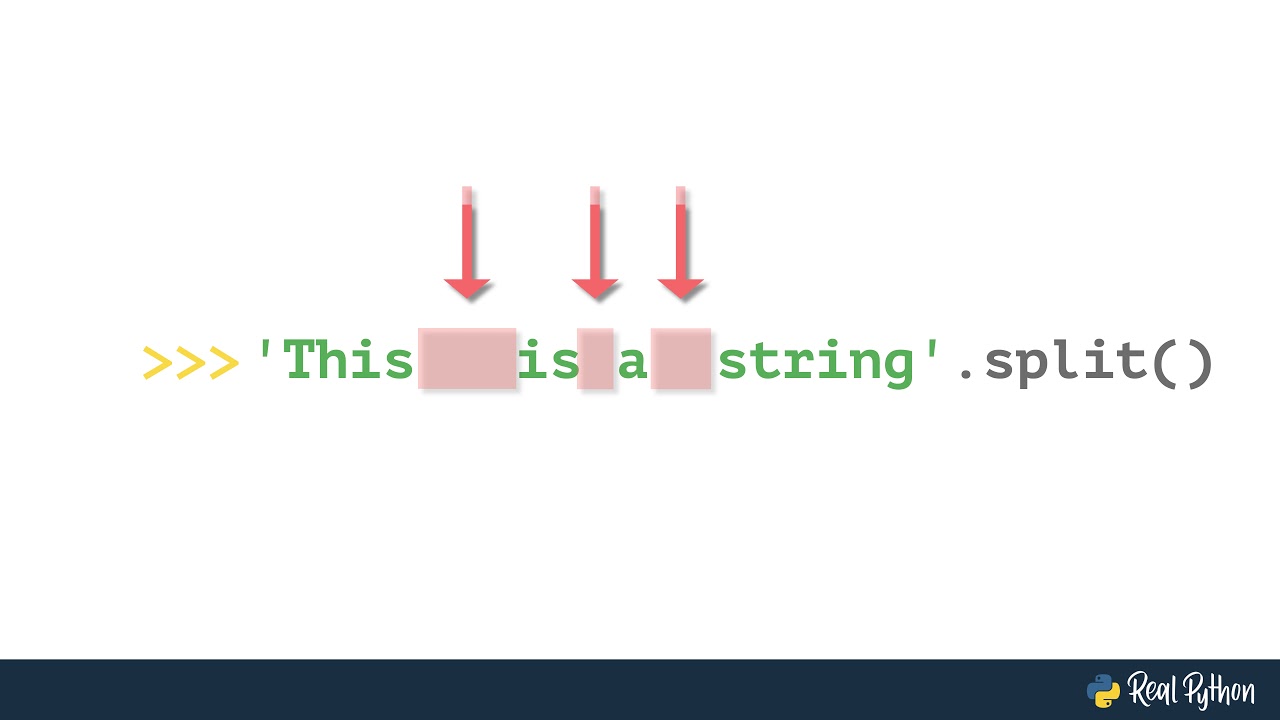

So the first thing we will show is how to tokenize words in a string. Python puts the tokenized words into a list, with each item in the list being one of the words in the string. For example, if we tokenized the string "The grass is green" into words, we would get ['The', 'grass', 'is', 'green']. A lot of natural language processing deals with the extraction of words and sentences. Being able to extract words from sentences and sentences from paragraphs is vital for natural language processing.

Tokenizing sentences means extracting sentences from a string and having each sentence become one item in a list. Python puts the tokenized sentences into a list, with each item in the list being one of the sentences in the string. The resultant output would be a list such as ['The sky is blue', 'The sun is yellow', 'The clouds are white', ...], with one item per sentence.

Python's string method split() returns a list of all the words in the string, using the given separator (it splits on any run of whitespace if the separator is left unspecified).

Sometimes, while working with data, the input arrives as a list of strings, and each string in the list has to be tokenized. This comes up in many machine learning applications.
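A short sketch of split() and of tokenizing each string in a list of strings follows. It uses only built-in string methods. The helper name tokenize_all and the sample data are hypothetical.

```python
from typing import List

text = "The grass is green"

# With no argument, split() splits on any run of whitespace.
print(text.split())           # ['The', 'grass', 'is', 'green']

# With an explicit separator, split() splits on exactly that string,
# so consecutive separators produce empty strings.
print("a,b,,c".split(","))    # ['a', 'b', '', 'c']


def tokenize_all(strings: List[str]) -> List[List[str]]:
    """Return one list of whitespace-separated tokens per input string."""
    return [s.split() for s in strings]


data = ["Python is great", "  extra   spaces here  ", ""]
print(tokenize_all(data))
# [['Python', 'is', 'great'], ['extra', 'spaces', 'here'], []]
```

The list comprehension keeps one token list per input string. This preserves which tokens came from which string, something a flat list would lose.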

Tokenizing words means extracting words from a string and having each word become one item in a list. Python's own lexer works with tokens too: a backslash does not continue a token except for string literals (that is, tokens other than string literals cannot be split across physical lines using a backslash). NLTK stands for Natural Language Toolkit, a Python library for natural language processing.
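Python's own tokenization can be inspected with the standard library's tokenize module. The sketch below lists the tokens of a one-line statement, and the statement itself is an arbitrary example. Using exact_type instead of type shows specific operator names such as PLUS. That mirrors the NUM/PLUS idea from the lexer discussion, although Python calls numeric tokens NUMBER.

```python
import io
import tokenize

source = "total = 437 + price  # a comment\n"

# generate_tokens() takes a readline callable and yields TokenInfo tuples.
for tok in tokenize.generate_tokens(io.StringIO(source).readline):
    # exact_type distinguishes operators (e.g. PLUS, EQUAL) that share type OP.
    print(tokenize.tok_name[tok.exact_type], repr(tok.string))
```

Comments appear as COMMENT tokens here, even though the compiler discards them later. This makes the module handy for tools that need to preserve source text.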

In this article, we show how to tokenize a string into words or sentences in Python using the NLTK module.
